Today, the Air Force Research Lab (AFRL) and IBM announce the development of a new Scale-out, Scale-up Synaptic Supercomputer (NS16e-4) that builds on the NS16e system previously built for Lawrence Livermore National Laboratory (LLNL). Over the last six years, IBM has expanded the number of neurons per system from 256 to more than 64 million, roughly an eightfold increase every year for six years!
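As a quick check of the growth figure, assume the 256-neuron starting point is a single TrueNorth core and the 64-million figure counts 64 chips of $2^{20}$ (1,048,576) neurons each. The six-year growth and the implied annual factor are then:

$$
\frac{64 \times 2^{20}}{2^{8}} = 2^{18} = 262{,}144 = 8^{6}
\quad\Longrightarrow\quad
\left(2^{18}\right)^{1/6} = 2^{3} = 8
$$

Under these assumptions, the neuron count grew by a factor of 8 each year: each year's system had 800 percent of the previous year's count, which is a 700 percent year-over-year increase.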

Don’t miss the story in TechCrunch and a video.

Enclosed below is a perspective from my colleagues.

Guest Blog by Bill Risk, Camillo Sassano, Mike DeBole, Ben Shaw, Aaron Cox, and Kevin Schultz.

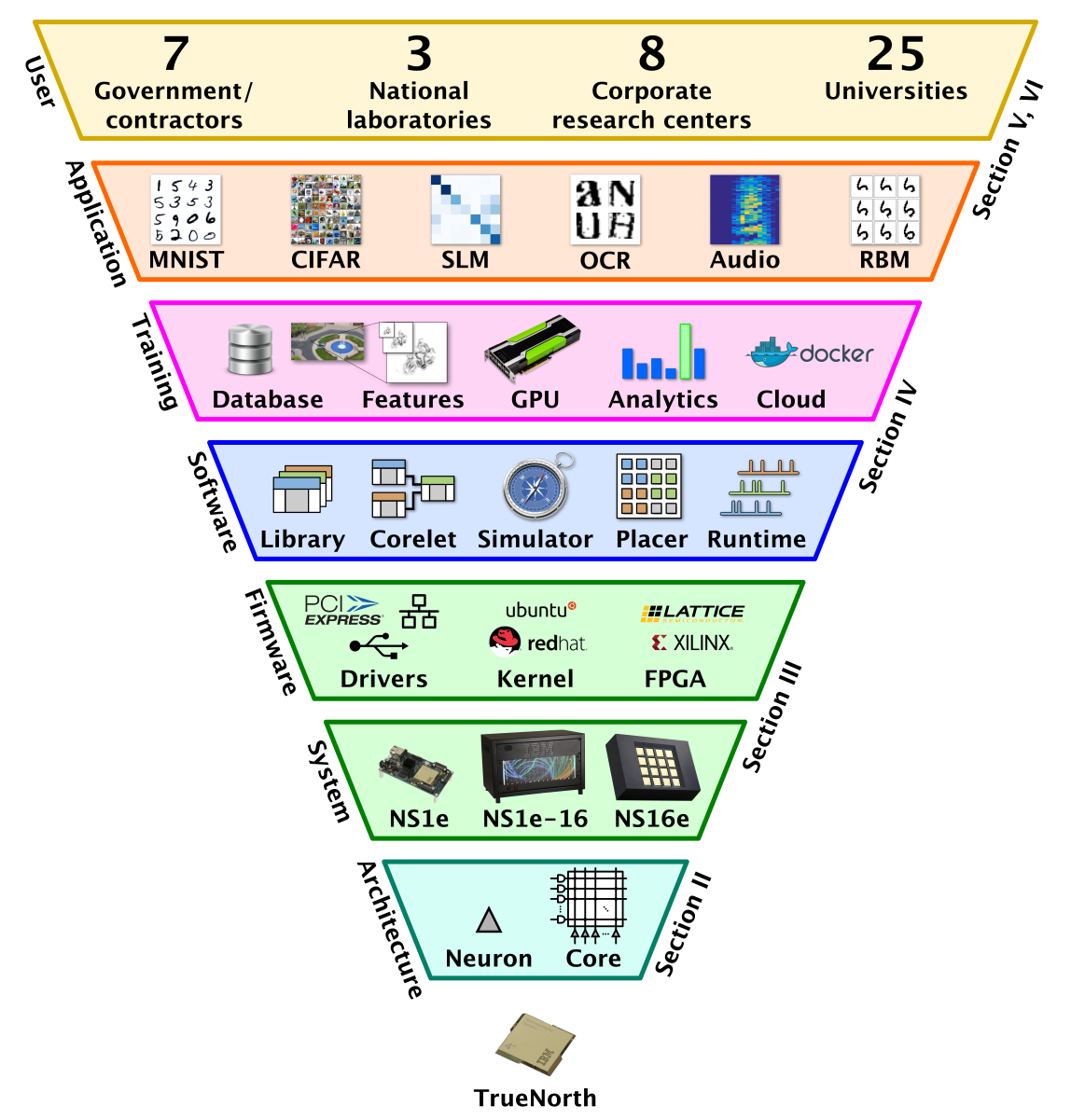

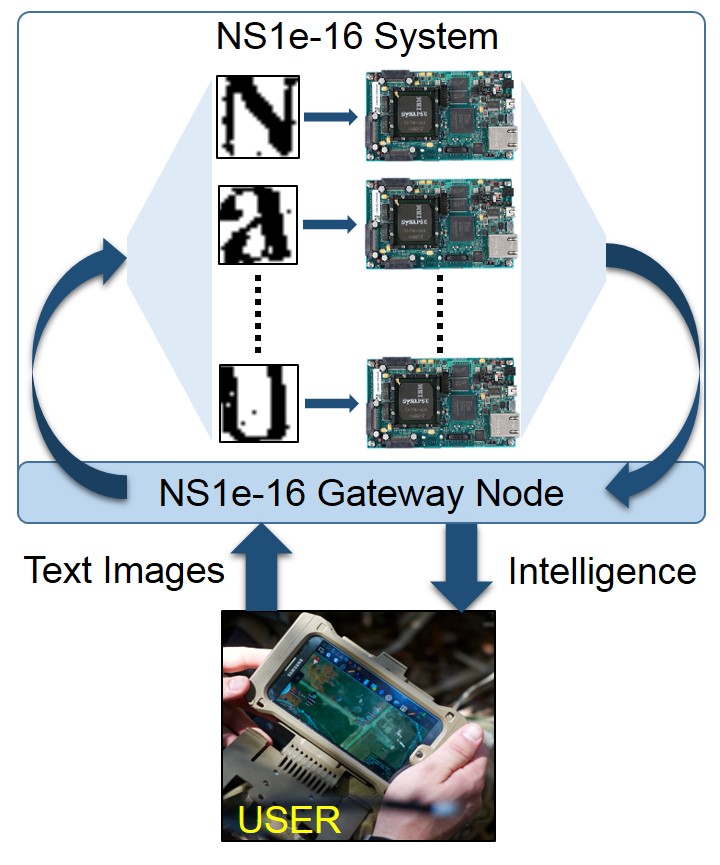

The IBM TrueNorth Neurosynaptic System NS16e-4 is the latest hardware innovation designed to exploit the capabilities of the TrueNorth chip. Through a combination of “scaling out” and “scaling up,” we have now increased the size of TrueNorth-based neurosynaptic systems by 64x over the original single-chip systems (Figure 1).

Figure 1. Scale-out and scale-up of systems based on the TrueNorth chip.

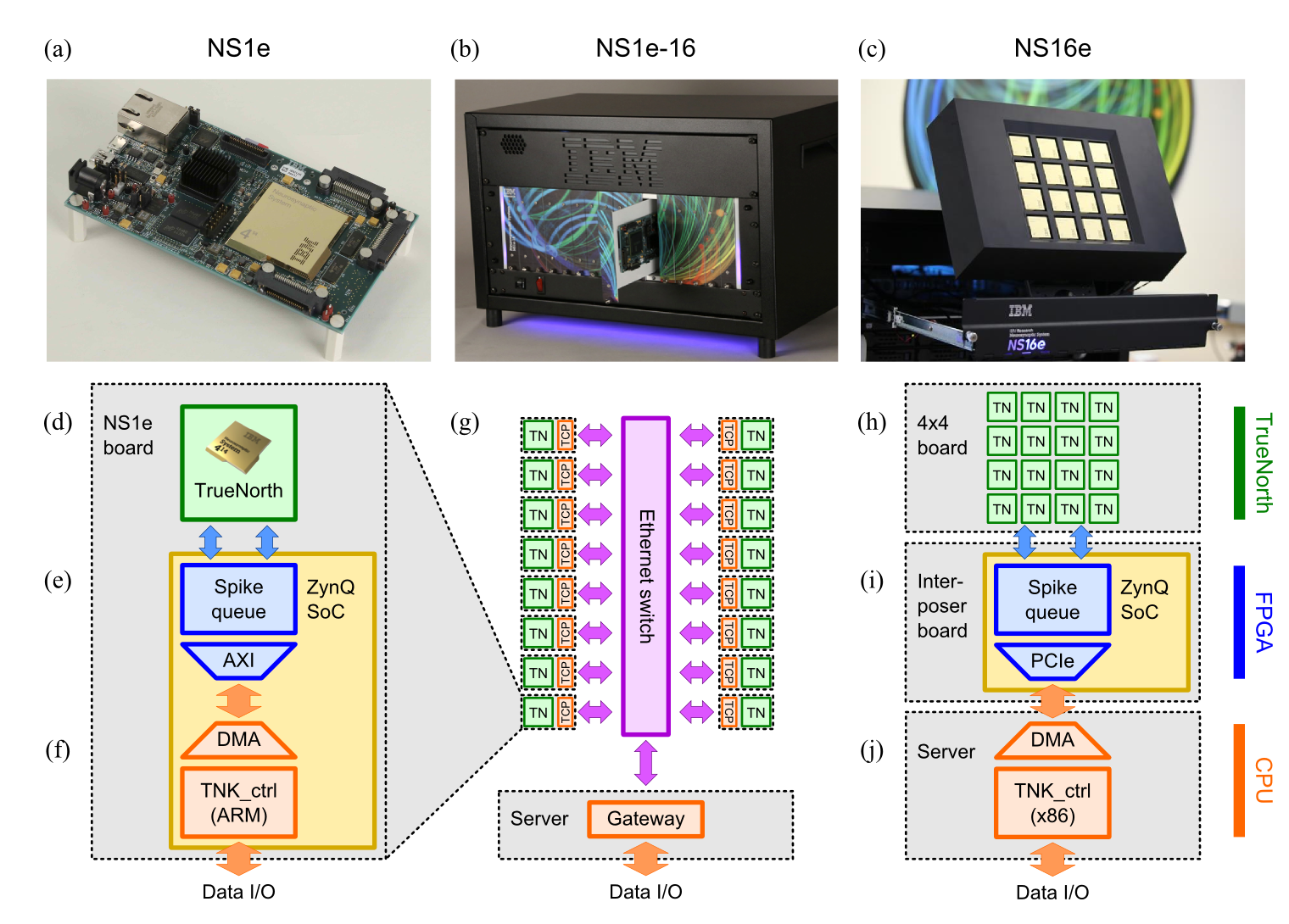

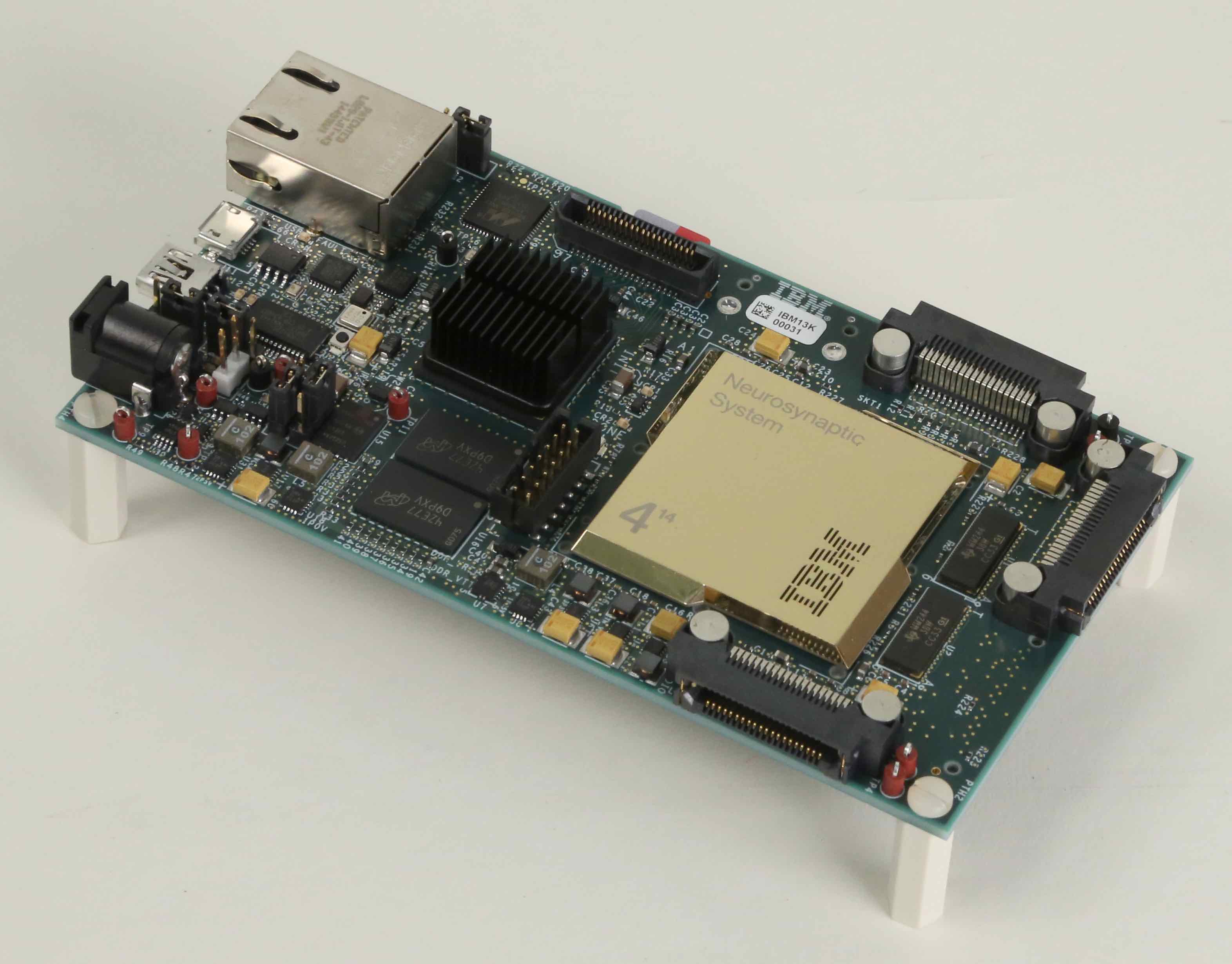

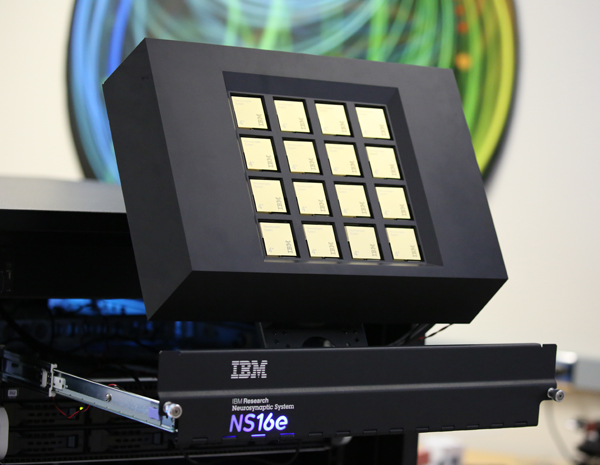

The NS1e board included a single TrueNorth chip and was designed to jump-start learning about and using the TrueNorth system. The first “scale-out” step, the NS1e-16, put sixteen of these boards in a single enclosure, which permitted running multiple jobs in parallel. The first “scale-up” system, the NS16e, exploited the TrueNorth chip’s built-in ability to tile seamlessly and communicate directly with other TrueNorth chips. While the NS1e-16 and NS16e offered the same number of neurons and synapses, the NS1e-16 was essentially 16 separate 1-million-neuron systems working in parallel, whereas the NS16e was a single 16-million-neuron system, allowing the exploration of substantially larger neurosynaptic networks. The NS16e-4 is the next step in this evolution, bringing four NS16e systems together in parallel to provide 64 million neurons and 16 billion synapses in a single enclosure.
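The capacity figures for each system follow from per-chip numbers. The sketch below tallies them, assuming the published TrueNorth chip organization (4,096 cores, each with 256 neurons and a 256 × 256 synaptic crossbar) and reading the round “million” and “billion” figures as powers of two. The `SYSTEMS` table and `describe` helper are hypothetical names for illustration only. The “largest single network” value also assumes, as the phrase “in parallel” suggests, that the four NS16e sub-systems in an NS16e-4 are not tiled into one 64-chip array.

```python
# Per-chip figures for the TrueNorth chip (assumed: 4,096 cores, each with
# 256 neurons and a 256 x 256 synaptic crossbar).
CORES_PER_CHIP = 4096
NEURONS_PER_CORE = 256
SYNAPSES_PER_CORE = 256 * 256

NEURONS_PER_CHIP = CORES_PER_CHIP * NEURONS_PER_CORE    # 2**20, the "1 million"
SYNAPSES_PER_CHIP = CORES_PER_CHIP * SYNAPSES_PER_CORE  # 2**28, the "256 million"

# Hypothetical table: (name, chips tiled into one sub-system, number of sub-systems)
SYSTEMS = [
    ("NS1e", 1, 1),
    ("NS1e-16", 1, 16),   # scale-out: 16 independent single-chip boards
    ("NS16e", 16, 1),     # scale-up: 16 chips tiled into one system
    ("NS16e-4", 16, 4),   # both: four independent 16-chip systems
]


def describe(name, chips_per_subsystem, subsystems):
    """Return total capacity and the largest network one sub-system can hold."""
    total_chips = chips_per_subsystem * subsystems
    return {
        "name": name,
        "total_chips": total_chips,
        "total_neurons": total_chips * NEURONS_PER_CHIP,
        "total_synapses": total_chips * SYNAPSES_PER_CHIP,
        # A single network cannot span independent sub-systems (assumption).
        "max_network_neurons": chips_per_subsystem * NEURONS_PER_CHIP,
    }


if __name__ == "__main__":
    for spec in SYSTEMS:
        d = describe(*spec)
        print(
            f"{d['name']:8} chips={d['total_chips']:3} "
            f"neurons={d['total_neurons'] / 2**20:.0f}M "
            f"synapses={d['total_synapses'] / 2**30:g}B "
            f"largest single network={d['max_network_neurons'] / 2**20:.0f}M neurons"
        )
```

Running it prints one line per system. The NS1e-16 and NS16e rows show identical totals but differ by 16x in the largest single network, which is the scale-out versus scale-up distinction described above.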

As announced today, the U.S. Air Force Research Lab has ordered the first NS16e-4 system. To deliver this system, we needed to devise a way to put four NS16e systems in the same enclosure, subject to the following constraints:

- It must fit in a 4U-high (7”) by 29” deep enclosure that can be mounted in a standard equipment rack

- All related components required by the system (power supplies, cabling, connectors, etc.), other than a separate 2U server that acts as a gateway for the system, must be contained within the same 4U space

- It must be possible to easily remove each NS16e sub-system so that it can be serviced, transferred to a different identical enclosure, or used independently as a standalone system

Given the size of the previous enclosure, it was not feasible to simply put four NS16e systems in a 4U-high box and meet these constraints. Instead, we first had to redesign some aspects of the NS16e circuit boards to permit a more compact form factor. At the same time, we redesigned the NS16e case to match the new form factor, while retaining the original design’s signature angular shapes.

This smaller form factor allowed us to consider several different ways that four NS16e sub-systems could be efficiently placed in the allotted space. The one we settled on places them in a unique V-shaped arrangement in a drawer (Figure 2a), which, when extended, provides easy access to the individual NS16e sub-systems. Empty spaces under the V provide room to route cables and move air for ventilation. A docking structure (not visible in Figure 2) holds the NS16e sub-systems in place when in use, provides power and signal connections, and permits them to be released and removed when necessary. This arrangement provides a view of all 64 TrueNorth chips. A transparent window and interior accent lighting permit the chips to be seen even when the drawer is closed (Figure 2b). Multiple NS16e-4 systems can be placed in the same rack; the novel front panel shape creates an intriguing 3D geometric pattern (Figure 3).

Figure 2a. NS16e-4 system with drawer open.

Figure 2b. NS16e-4 system with drawer closed.

Meeting all of the technical requirements called for creative industrial design. We designed for utility, but were pleased that a measure of beauty and elegance emerged through the design process. We now look forward to building and delivering it!

Figure 3. Two 4U-high NS16e-4 systems stacked above a 2U-high server.

The following timeline provides context for today’s milestone in terms of the continued evolution of our project.

Illustration Credit: William Risk